Convolutional Neural Networks

Neural networks

Pros

- Generic model

- Multiple outputs

- Converges to (fits) the training data


Cons

- Slow to train

- Overfitting

- Does not incorporate local structure (e.g., the relation between neighboring pixels)

The case of images

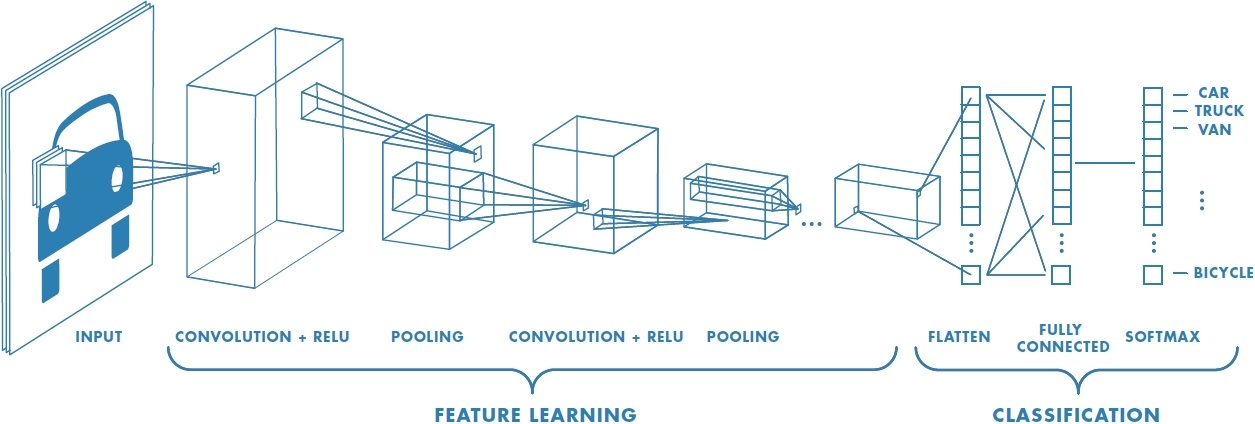

Convolutional networks

- Convolutional layer

- Max-pooling layer

- Fully connected (regular) layer

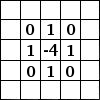

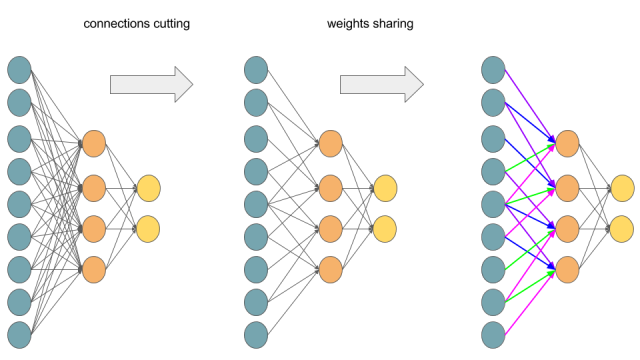

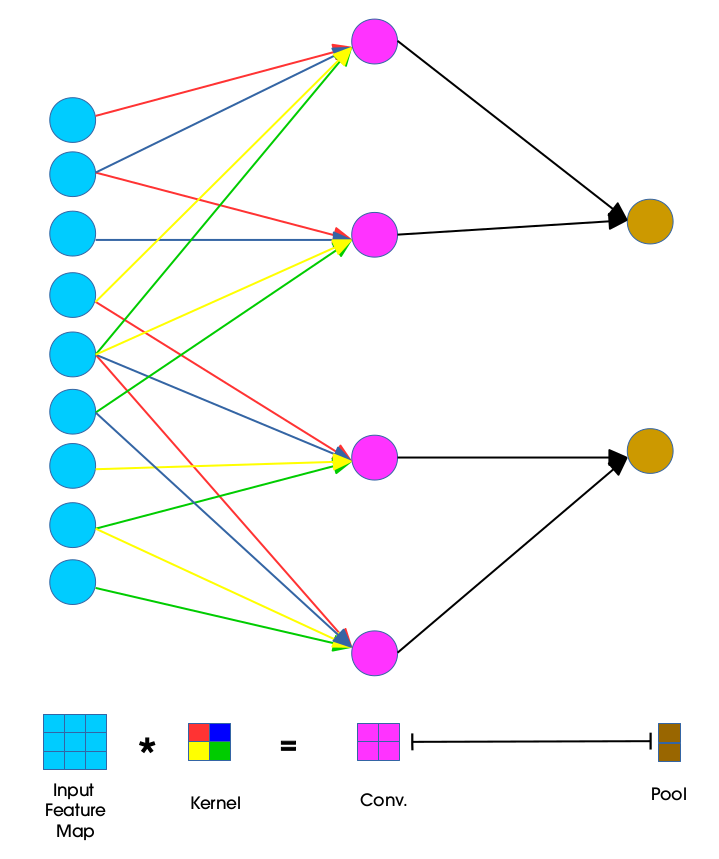

Convolutional layer

- Based on the concept of a filter


- Output: a filtered image

- All neurons share the same weights (the filter)
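To make the effect of weight sharing concrete, the arithmetic below compares parameter counts for a hypothetical 32×32 single-channel input mapped to a 30×30 output (what a 3×3 filter with stride 1 and no padding produces). The sizes are arbitrary choices for illustration and biases are ignored.

```python
# Hypothetical sizes chosen for illustration (biases ignored).
H_IN, W_IN = 32, 32      # input image
H_OUT, W_OUT = 30, 30    # output of a 3x3 filter, stride 1, no padding
N = 3                    # filter size (n x n)

# Fully connected: every output neuron is connected to every input pixel.
fully_connected = (H_IN * W_IN) * (H_OUT * W_OUT)

# Locally connected without sharing: each output neuron has its own n x n weights.
local_no_sharing = (H_OUT * W_OUT) * (N * N)

# Convolutional: all output neurons share one n x n filter.
convolutional = N * N

print(fully_connected, local_no_sharing, convolutional)  # 921600 8100 9
```

Locality alone cuts the count by two orders of magnitude; sharing the filter across positions reduces it to the filter size itself.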


Hyperparameters

- Filter size, square ($n \times n$)

- Number of filters $k$

- Stride

- Padding
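A common way to relate these hyperparameters to the layer's output is $\lfloor (W - n + 2P)/S \rfloor + 1$ for an input of width $W$, filter size $n$, padding $P$ and stride $S$; with $k$ filters over $C_{in}$ input channels the layer has $n \cdot n \cdot C_{in} \cdot k$ weights plus $k$ biases. A minimal sketch, where the helper names are hypothetical and rounding down is an assumption (it is the usual default):

```python
def conv_output_size(w_in: int, n: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one dimension (rounded down)."""
    return (w_in - n + 2 * padding) // stride + 1


def conv_num_params(n: int, c_in: int, k: int, bias: bool = True) -> int:
    """Learnable parameters of a layer with k filters of size n x n x c_in."""
    weights = n * n * c_in * k
    return weights + (k if bias else 0)


# 3x3 filters, stride 1, padding 1 keep the spatial size (as in VGG's CONV3 layers).
print(conv_output_size(224, n=3, stride=1, padding=1))  # 224
# Stride 2 without padding roughly halves it.
print(conv_output_size(224, n=3, stride=2, padding=0))  # 111
# First VGG16 layer, weights only: (3*3*3)*64.
print(conv_num_params(n=3, c_in=3, k=64, bias=False))   # 1728
```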


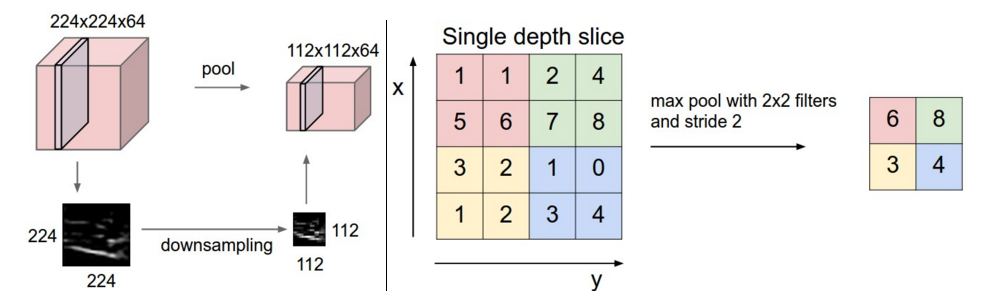

Max-pooling layer

A filter window from which only the maximum value is kept

Hyperparameters

- Filter size, square ($n \times n$)

How many parameters?
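A max-pooling layer has hyperparameters but no learnable weights (the VGG table later in this section lists its weights as 0). A minimal NumPy sketch, assuming non-overlapping windows by default (stride equal to the window size, the usual 2×2 setting); the function name `max_pool2d` is hypothetical:

```python
import numpy as np


def max_pool2d(h_prev, size=2, stride=None):
    """Max pooling over size x size windows; stride defaults to size (non-overlapping)."""
    stride = size if stride is None else stride
    out_rows = (h_prev.shape[0] - size) // stride + 1
    out_cols = (h_prev.shape[1] - size) // stride + 1
    out = np.empty((out_rows, out_cols))
    for i in range(out_rows):
        for j in range(out_cols):
            r, c = i * stride, j * stride
            # Keep only the largest value in the window; nothing is learned here.
            out[i, j] = h_prev[r:r + size, c:c + size].max()
    return out


x = np.array([[1, 3, 2, 0],
              [4, 2, 1, 5],
              [0, 1, 7, 2],
              [6, 2, 3, 1]], dtype=float)
print(max_pool2d(x))  # values [[4, 5], [6, 7]]
```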

Fully connected layer

A regular layer: every neuron is connected to all outputs of the previous layer

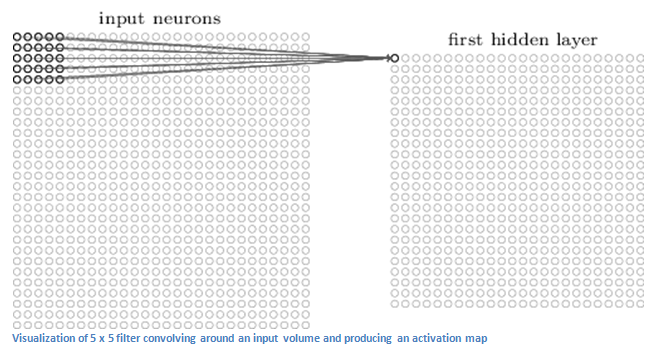

Forward propagation

Forward propagation in a convolutional network is very similar to that of a fully connected network
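As a minimal sketch of this forward pass (one input channel, one filter, stride 1, no padding), the NumPy code below computes $z_{i,j} = \sum_m \sum_n w_{m,n} h_{i+m,j+n} + b$ followed by $h_{i,j} = f(z_{i,j})$. The function name `conv2d_forward`, the single scalar bias `b` shared across positions, and the choice of `tanh` for $f$ are assumptions made for illustration.

```python
import numpy as np


def conv2d_forward(h_prev, w, b, f=np.tanh):
    """Single-channel 'valid' convolution layer (cross-correlation, stride 1, no padding).

    z[i, j] = sum_m sum_n w[m, n] * h_prev[i + m, j + n] + b
    h[i, j] = f(z[i, j])
    """
    n_rows, n_cols = w.shape
    out_rows = h_prev.shape[0] - n_rows + 1
    out_cols = h_prev.shape[1] - n_cols + 1
    z = np.empty((out_rows, out_cols))
    for i in range(out_rows):
        for j in range(out_cols):
            # The same filter w (shared weights) is applied at every position (i, j).
            z[i, j] = np.sum(w * h_prev[i:i + n_rows, j:j + n_cols]) + b
    return z, f(z)


# Hypothetical 4x4 input and 2x2 filter, chosen for illustration.
h_prev = np.arange(16, dtype=float).reshape(4, 4)
w = np.array([[1.0, 0.0], [0.0, -1.0]])
z, h = conv2d_forward(h_prev, w, b=0.5)
print(z)  # a 3x3 array filled with -4.5
```

Here every window satisfies $h_{i,j} - h_{i+1,j+1} = -5$, so the filtered image is constant; with a real image the same filter would respond differently at each position.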


$$ \begin{align} z_{i,j}^l &= w_{m,n}^l \ast h_{i,j}^{l-1} + b_{i,j}^l \\ z_{i,j}^l &= \sum_{m} \sum_{n} w_{m,n}^l \, h_{i+m,j+n}^{l-1} + b_{i,j}^l \\ h_{i,j}^l &= f(z_{i,j}^l) \end{align} $$

Convolutional layer + max pooling

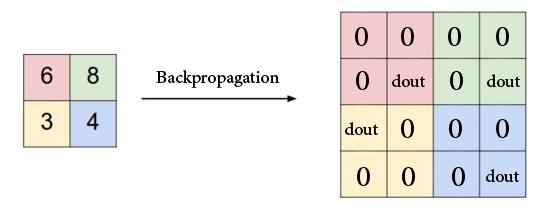

Forward propagation: max pooling


$$ h_{i,j}^l = \max_{m,n} h^{l-1}_{i+m,j+n} $$

BP Conv

The error sensitivity ($\delta$) is propagated backwards, from the output layer toward the input

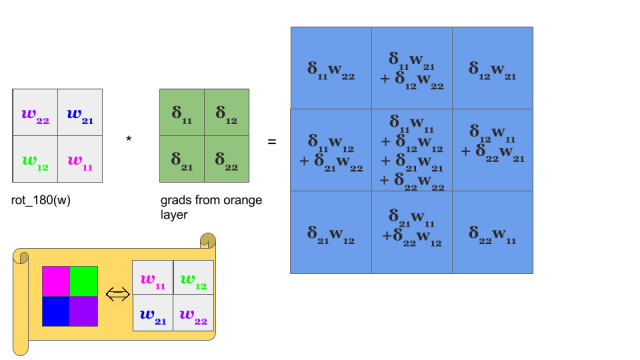
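A minimal NumPy sketch of backpropagation through one convolutional layer, assuming a single channel, stride 1, no padding and a scalar bias. It computes the filter gradient $\partial E/\partial w_{m',n'} = \sum_i \sum_j \delta_{i,j} h_{i+m',j+n'}$ and the gradient with respect to the layer input as a "full" correlation of the zero-padded $\delta$ with the filter rotated by 180°; multiplying that input gradient elementwise by $f'$ of the previous layer's $z$ gives that layer's $\delta$. The function names are hypothetical, and a finite-difference check verifies both gradients for the linear loss $E = \sum g \cdot z$, where the random `g` plays the role of $\delta$.

```python
import numpy as np


def correlate_valid(x, k):
    """'Valid' cross-correlation: out[i, j] = sum_m sum_n k[m, n] * x[i + m, j + n]."""
    kr, kc = k.shape
    out = np.empty((x.shape[0] - kr + 1, x.shape[1] - kc + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(k * x[i:i + kr, j:j + kc])
    return out


def conv_backward(h_prev, w, delta):
    """Gradients for z = correlate_valid(h_prev, w) + b, given delta = dE/dz."""
    # dE/dw[m, n] = sum_i sum_j delta[i, j] * h_prev[i + m, j + n]
    grad_w = correlate_valid(h_prev, delta)
    grad_b = delta.sum()
    # dE/dh_prev: zero-pad delta, then correlate with the filter rotated 180 degrees.
    pr, pc = w.shape[0] - 1, w.shape[1] - 1
    delta_padded = np.pad(delta, ((pr, pr), (pc, pc)))
    grad_h_prev = correlate_valid(delta_padded, np.rot90(w, 2))
    return grad_w, grad_b, grad_h_prev


rng = np.random.default_rng(0)
h_prev = rng.normal(size=(5, 5))
w = rng.normal(size=(3, 3))
g = rng.normal(size=(3, 3))  # dE/dz for the loss E = sum(g * z)


def loss(h_prev, w):
    return np.sum(g * correlate_valid(h_prev, w))


grad_w, grad_b, grad_h = conv_backward(h_prev, w, g)

# Central finite differences as an independent check of both gradients.
eps = 1e-6
num_w = np.zeros_like(w)
for idx in np.ndindex(w.shape):
    wp, wm = w.copy(), w.copy()
    wp[idx] += eps
    wm[idx] -= eps
    num_w[idx] = (loss(h_prev, wp) - loss(h_prev, wm)) / (2 * eps)
num_h = np.zeros_like(h_prev)
for idx in np.ndindex(h_prev.shape):
    hp, hm = h_prev.copy(), h_prev.copy()
    hp[idx] += eps
    hm[idx] -= eps
    num_h[idx] = (loss(hp, w) - loss(hm, w)) / (2 * eps)
print(np.allclose(grad_w, num_w, atol=1e-6), np.allclose(grad_h, num_h, atol=1e-6))
```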


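$$ \begin{align} \delta_{i',j'}^{l} = \frac{\partial E}{\partial z_{i',j'}^{l}} &= \left( \delta^{l+1} \ast \text{rot}_{180^\circ} \left\{ w_{m,n}^{l+1} \right\} \right)_{i',j'} f'\left(z_{i',j'}^{l} \right) \\ \frac{\partial E}{\partial w_{m',n'}^{l}} &= \sum_{i} \sum_{j} \delta_{i,j}^{l} \, h_{i+m',j+n'}^{l-1} \end{align} $$

BP maxpool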

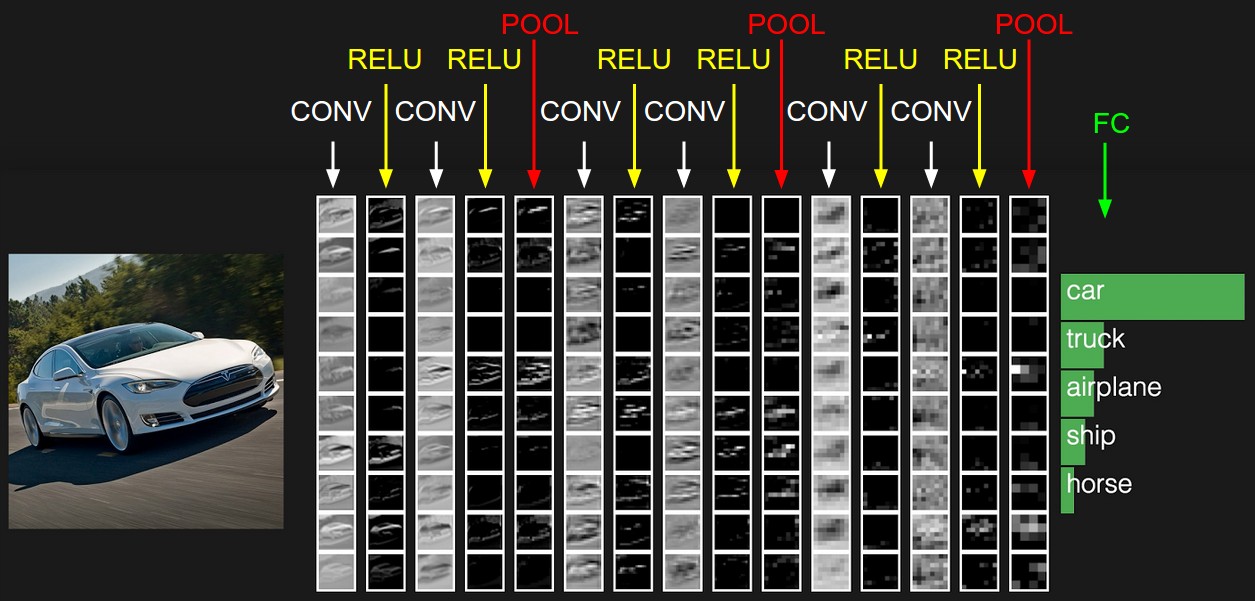

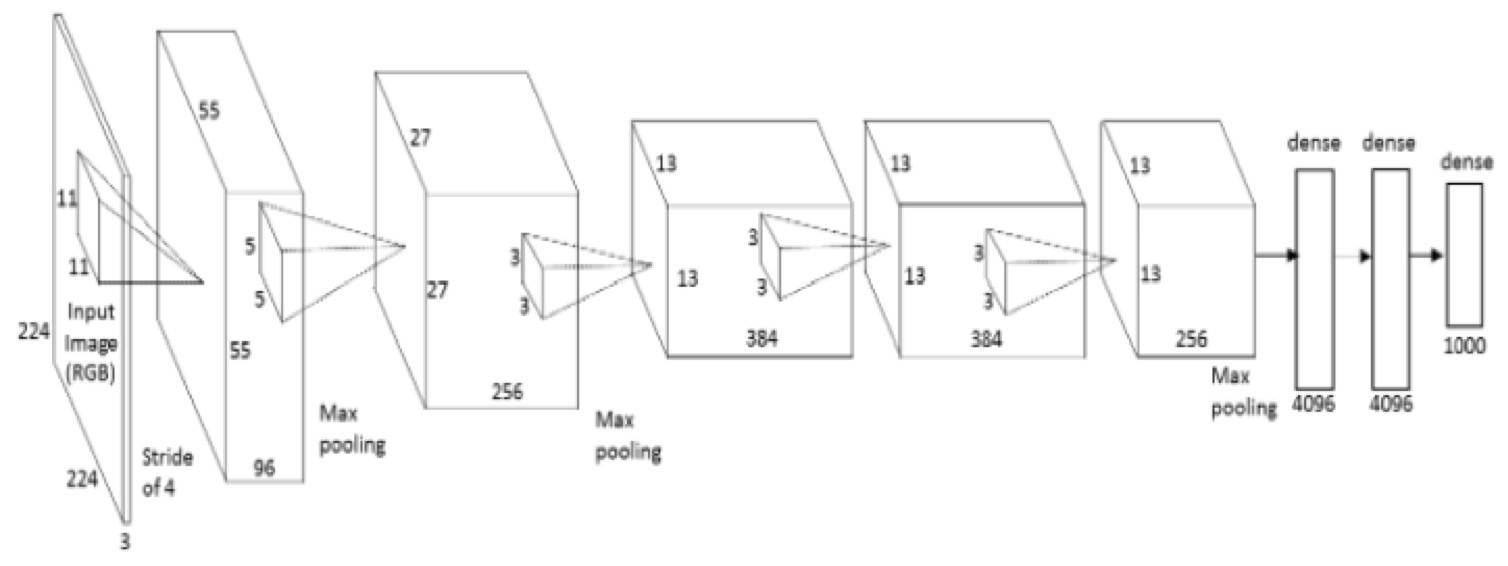

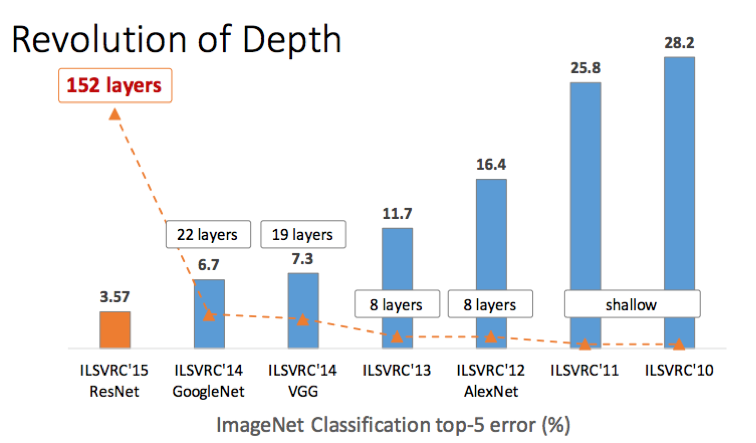
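In backpropagation, max pooling has nothing to learn: it only routes each output gradient to the input position that produced the window's maximum, and every other position receives zero. A minimal sketch assuming non-overlapping 2×2 windows (stride equal to the window size); `max_pool2d_backward` is a hypothetical name, and on ties `np.argmax` picks the first maximum.

```python
import numpy as np


def max_pool2d_backward(h_prev, grad_out, size=2):
    """Backprop through non-overlapping size x size max pooling (stride = size).

    Each output gradient goes to the input position that held the window's
    maximum; all other positions in the window receive zero gradient.
    """
    grad_prev = np.zeros_like(h_prev)
    for i in range(grad_out.shape[0]):
        for j in range(grad_out.shape[1]):
            r, c = i * size, j * size
            window = h_prev[r:r + size, c:c + size]
            m, n = np.unravel_index(np.argmax(window), window.shape)
            grad_prev[r + m, c + n] += grad_out[i, j]
    return grad_prev


x = np.array([[1, 3, 2, 0],
              [4, 2, 1, 5],
              [0, 1, 7, 2],
              [6, 2, 3, 1]], dtype=float)
grad_out = np.array([[10.0, 20.0], [30.0, 40.0]])
print(max_pool2d_backward(x, grad_out))
```

The maxima 4, 5, 6 and 7 sit at positions (1, 0), (1, 3), (3, 0) and (2, 2), so those are the only entries that receive 10, 20, 30 and 40.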

Notable networks

- LeNet, 1990

AlexNet

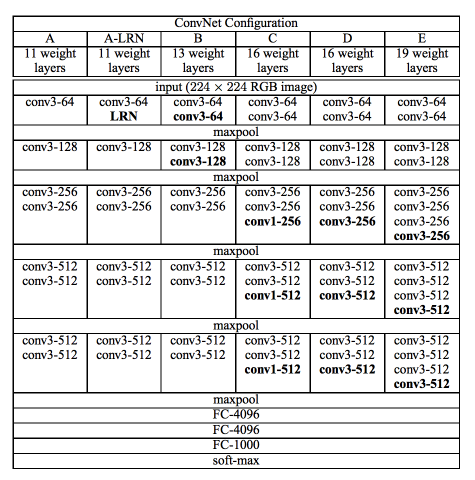

VGG16

The VGG family

| Layer | Output shape | Memory (activations) | Weights |
|---|---|---|---|
| INPUT | [224x224x3] | 224×224×3 = 150K | 0 |
| CONV3-64 | [224x224x64] | 224×224×64 = 3.2M | (3×3×3)×64 = 1,728 |
| CONV3-64 | [224x224x64] | 224×224×64 = 3.2M | (3×3×64)×64 = 36,864 |
| POOL2 | [112x112x64] | 112×112×64 = 800K | 0 |
| CONV3-128 | [112x112x128] | 112×112×128 = 1.6M | (3×3×64)×128 = 73,728 |
| CONV3-128 | [112x112x128] | 112×112×128 = 1.6M | (3×3×128)×128 = 147,456 |
| POOL2 | [56x56x128] | 56×56×128 = 400K | 0 |
| CONV3-256 | [56x56x256] | 56×56×256 = 800K | (3×3×128)×256 = 294,912 |
| CONV3-256 | [56x56x256] | 56×56×256 = 800K | (3×3×256)×256 = 589,824 |
| CONV3-256 | [56x56x256] | 56×56×256 = 800K | (3×3×256)×256 = 589,824 |
| POOL2 | [28x28x256] | 28×28×256 = 200K | 0 |
| CONV3-512 | [28x28x512] | 28×28×512 = 400K | (3×3×256)×512 = 1,179,648 |
| CONV3-512 | [28x28x512] | 28×28×512 = 400K | (3×3×512)×512 = 2,359,296 |
| CONV3-512 | [28x28x512] | 28×28×512 = 400K | (3×3×512)×512 = 2,359,296 |
| POOL2 | [14x14x512] | 14×14×512 = 100K | 0 |
| CONV3-512 | [14x14x512] | 14×14×512 = 100K | (3×3×512)×512 = 2,359,296 |
| CONV3-512 | [14x14x512] | 14×14×512 = 100K | (3×3×512)×512 = 2,359,296 |
| CONV3-512 | [14x14x512] | 14×14×512 = 100K | (3×3×512)×512 = 2,359,296 |
| POOL2 | [7x7x512] | 7×7×512 = 25K | 0 |
| FC | [1x1x4096] | 4096 | 7×7×512×4096 = 102,760,448 |
| FC | [1x1x4096] | 4096 | 4096×4096 = 16,777,216 |
| FC | [1x1x1000] | 1000 | 4096×1000 = 4,096,000 |

TOTAL memory: ~15.2M values × 4 bytes ≈ 61 MB per image (forward pass only; roughly ×2 for the backward pass)

TOTAL params: 138M parameters (weights only; biases are not counted)
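The per-layer figures can be recomputed from the architecture itself. The sketch below (hypothetical helper `vgg16_summary`) assumes 3×3 convolutions with stride 1 and padding 1, 2×2 max pooling with stride 2, and counts weights only, as the table does; it then sums activations and weights over all layers.

```python
def vgg16_summary(input_size=224, num_classes=1000):
    """(name, activations, weights) per VGG16 layer; biases are ignored."""
    # Hypothetical compact encoding: integers are CONV3 output channels, "P" is POOL2.
    config = [64, 64, "P", 128, 128, "P", 256, 256, 256, "P",
              512, 512, 512, "P", 512, 512, 512, "P"]
    size, channels = input_size, 3
    layers = [("INPUT", size * size * channels, 0)]
    for item in config:
        if item == "P":
            size //= 2  # 2x2 pooling with stride 2 halves H and W; no weights
            layers.append(("POOL2", size * size * channels, 0))
        else:
            # 3x3 conv with padding 1 keeps H and W; weights = (3*3*C_in) * C_out
            layers.append((f"CONV3-{item}", size * size * item, 3 * 3 * channels * item))
            channels = item
    fan_in = size * size * channels  # flattened 7x7x512 feature map
    for units in (4096, 4096, num_classes):
        layers.append(("FC", units, fan_in * units))
        fan_in = units
    return layers


layers = vgg16_summary()
total_memory = sum(act for _, act, _ in layers)
total_params = sum(wts for _, _, wts in layers)
print(f"activations: {total_memory:,} values, ~{total_memory * 4 / 1e6:.0f} MB at 4 bytes each")
print(f"weights: {total_params:,}")
```

It reports 15,237,608 activation values (about 61 MB at 4 bytes each) and 138,344,128 weights.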

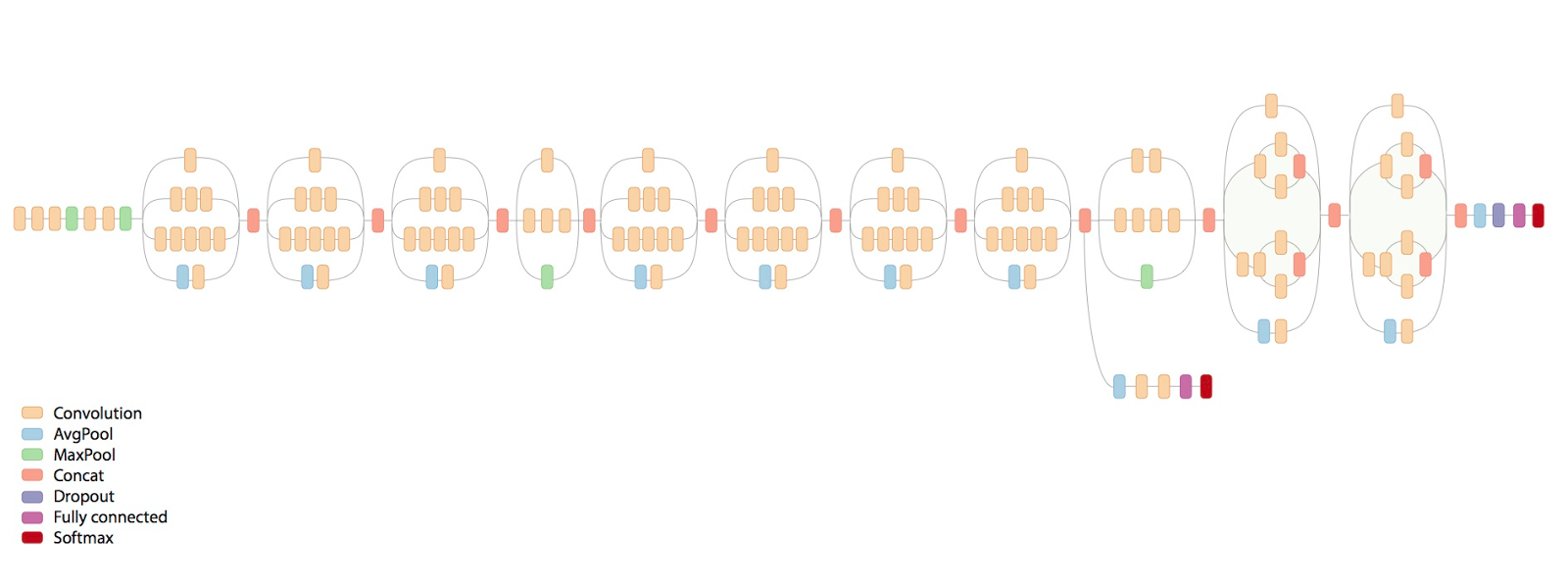

GoogLeNet

Inception V3

DeepDream

ResNet

Evolution

Clustering, dimensionality reduction and visualization by Ivan V. Meza Ruiz is licensed under a Creative Commons Attribution 4.0 International License.

Based on the work at http://turing.iimas.unam.mx/~ivanvladimir/slides/rpyaa/07_cluster.html.